How Apple now lends a voice to those with speech impairments

Published: Thursday, May 18, 2023 at 3:12 am

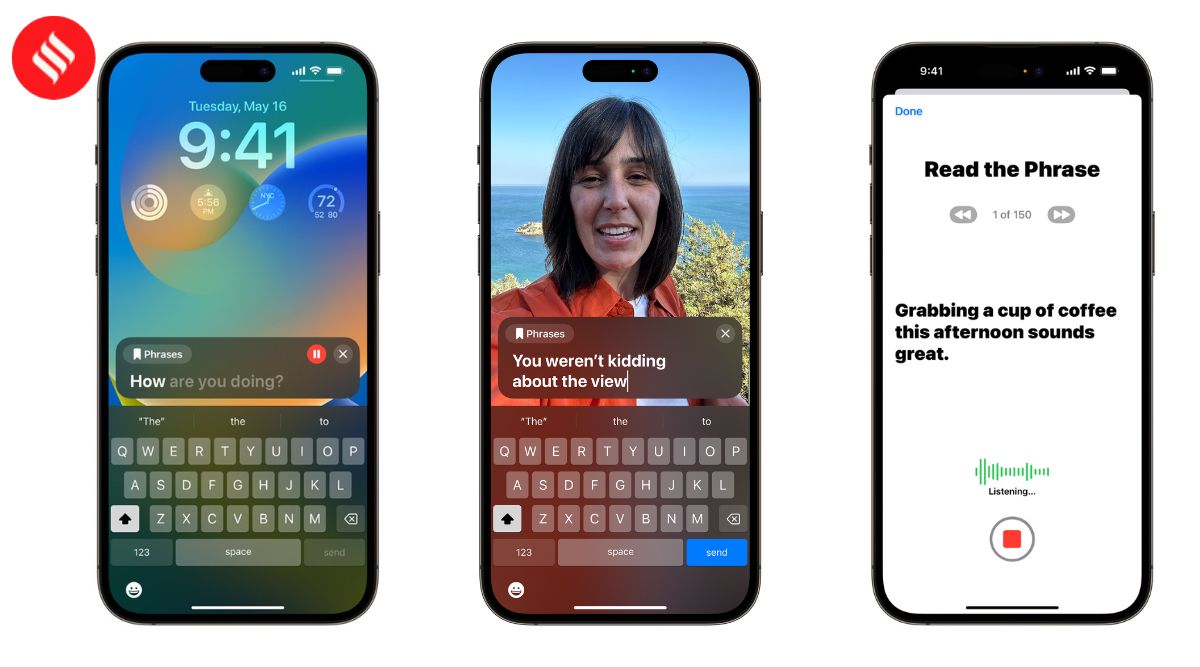

I spent the first two decades of my life worrying about how I would utter my next sentence and where it would lead. As someone who stammered and stuttered over most words, I found that every conversation, especially with strangers, took a toll on me mentally and physically. The fear of rebuke made me clam up when I should have been speaking out. This is where Apple's new Live Speech feature could be a saviour for millions of people with speech impairments, especially children.

Live Speech, which works on iPhone, iPad, and Mac, lets users type what they want to say and have it spoken aloud. It also works during FaceTime and regular phone calls. Users can save phrases they use often, which is usually where people who stutter struggle most, such as when saying their name or giving their address.

Nor are users stuck with a computer-sounding voice. Apple has also introduced Personal Voice, aimed primarily at people at risk of losing their ability to speak due to conditions such as amyotrophic lateral sclerosis (ALS). The feature lets users train an iPhone or iPad to sound like them with just 15 minutes of recorded audio. It uses on-device machine learning to keep users' information private and secure, and it integrates seamlessly with Live Speech.

These are just some of the new accessibility features Apple has announced to mark Global Accessibility Awareness Day. Live Speech and Personal Voice will be available later this year. Apple has also previewed Assistive Access, which offers a customised experience for calls, Messages, Camera, Photos, and Music. With high-contrast buttons and large text labels, the feature lets trusted supporters tailor the experience for the individuals they help. Assistive Access focuses on the apps a person uses most, while still allowing them to be customised. It was designed with feedback from people with cognitive disabilities and their trusted supporters.
Then there is Point and Speak, built into the Magnifier app, which helps people with vision disabilities recognise and interact with physical objects that have several text labels. For example, when a user points their device at a household appliance such as a microwave, Point and Speak combines input from the Camera app and the LiDAR Scanner with on-device machine learning to announce the text on each button as the user moves a finger across the keypad. The feature can be used alongside other Magnifier features such as People Detection, Door Detection, and Image Descriptions.

For Assistive Access, the onboarding experience, which aims to be both intuitive and easy to use, will be critical to how users understand and start using the feature. It will also gather input, such as which apps matter most to the user, so that the experience can be truly customised. This fine-tuning is not a one-time process either; users can keep updating their requirements over time.

As Sarah Herrlinger, Apple's senior director of Global Accessibility Policy and Initiatives, explains in a press release: "These groundbreaking features were designed with feedback from members of disability communities every step of the way, to support a diverse set of users and help people connect in new ways."

That is why Apple has focused on getting the details right. For instance, Voice Control now adds phonetic suggestions for text editing, so users who type with their voice can choose the right word out of several that sound alike, such as "do," "due," and "dew." For those with physical and motor disabilities, Switch Control can now turn any switch into a virtual game controller. Plus, Text Size is now easier to adjust across Mac apps such as Finder, Messages, Mail, Calendar, and Notes.